The National Assessment of Educational Progress Is Flawed

The National Assessment of Educational Progress, also known as the Nation’s Report Card and as NAEP, is flawed as a tool of education policy.

Recently, a fellow education reform advocate asked for feedback about an article in the New York Times lamenting the unprecedented declines in NAEP scores for 9-year-olds.

To quote the article:

“This is a test that can unabashedly speak to federal and state leaders in a clear-eyed way about how much work we have to do,” said Andrew Ho, a professor of education at Harvard and an expert on education testing who previously served on the board that oversees the exam.

End quote.

It appears to me that an erroneous model is at work when test scores are touted as significant feedback for policymakers.

The model rests on a premise: that teachers teaching students CAUSES those students to earn some particular test score.

Based on that premise, it follows that if policymakers can intervene in just the right way, they can CAUSE the teachers to do a better job of teaching and thereby CAUSE the students to earn better test scores.

A nice row of causal dominoes, but I’m skeptical.

An Analogy for the National Assessment of Educational Progress

Hi, my name is Don Berg.

I'm a psychologist who specializes in motivation in educational settings.

Both common sense and decades of research suggest that motivation matters to learning.

Yet the NAEP data include no measures of the factors relevant to motivation.

Let me suggest an analogy to illustrate why this is such a problem.

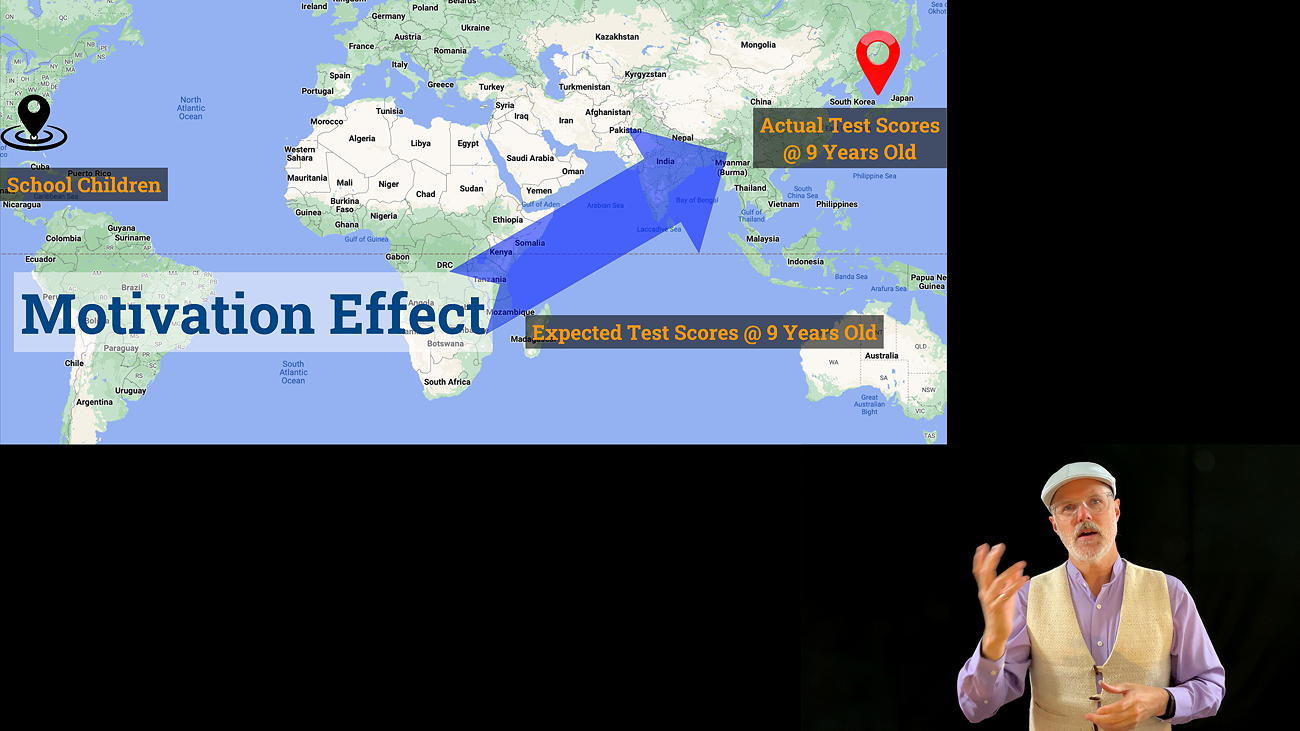

Imagine it is the late 1950s and NASA is just starting to develop its first rockets.

They launch a rocket designed to reach the upper atmosphere and then come down in the Indian Ocean.

Instead, it comes down in the Sea of Japan.

Even though their computers had calculated a perfect trajectory, something clearly went wrong.

When they check all the systems on the rocket itself, there is no problem.

The rocket had precisely followed the navigational sequence that had been calculated.

What could have happened?

As they scratch their heads, a junior engineer realizes that very strong winds were blowing that day and wonders whether they had something to do with it.

The junior engineer obtains detailed analyses of the wind for that day at the relevant locations and discovers that, once the wind is factored in, the corrected calculations match the rocket’s actual trajectory to a T.

At the end of his PowerPoint presentation, the senior engineers all slap their foreheads with a hearty laugh, and the junior engineer gets a promotion.

Of course, rocket scientists who don’t anticipate the effects of wind would be considered incompetent, but remember that this absurd scenario was constructed only as the basis for an analogy.

So, the New York Times article portrays a group of experts lamenting that the NAEP scores for 9-year-olds did not meet their expectations.

They are scratching their heads, like my fictional engineers were, as they try to figure out why their expectations failed to be met.

In their view, the instructional system should have done a better job of getting those kids to produce higher scores.

Now they are mystified that reality did not deliver the test results their systematic approach was supposed to produce.

It might strike you as obvious that motivation matters to learning, yet, from a motivation perspective, there is a pervasive lack of measures reflecting what is actually happening in schools.

Wind is an important part of the weather; in my analogy, its equivalent is motivation, an important part of classroom and school climate.

The relevant measures would include patterns of motivation, psychological need satisfaction, and degrees of engagement.

Together, these make up what I call experiential data.

The experts reflecting on NAEP scores are aghast that the scores have declined so sharply, and they are ready to call for policy changes.

But the NAEP data model takes no account of the classroom and school climates in which those 9-year-olds are making their learning journeys.

I've conducted a little bit of research in my time.

One of the most basic lessons I learned during my training was that everyone has (even if only implicitly) a model of what they think is going on.

Models are necessarily simplifications of reality, but a good model helps you do useful things like make predictions and change outcomes.

I was taught that one of the most important things a researcher must do is test their models to ensure that they fit both the purposes being served and the reality in which those purposes are being pursued.

Here’s where the rocket analogy breaks down.

The Breakdown of the Analogy for the National Assessment of Educational Progress

NASA does not have this problem with the wind because it uses real-time data to manage the effects the wind will have on its rockets.

The NAEP process only measures certain outcomes; it does nothing to portray the path that got the 9-year-olds to the point they measured.

In particular, NAEP is completely blind to the effects of motivation.

In my new book, Schooling For Holistic Equity: How to Manage the Hidden Curriculum in K-12, I propose an alternative to the academic delivery model that appears to be dominant in the mainstream school system.

My model of learning is based on mental mapping and navigation, and it incorporates motivation.

I suggest that we need a different kind of information that can better help teachers and principals improve their classrooms and schools.

In a nutshell, we should collect what I call experiential data several times each year so that teachers and principals have direct feedback about the quality of their classroom and school climates.

My model of experiential data is based on the science of Self-Determination Theory, which is the most thorough and well-supported explanation of human motivation available today.

The flaw in the National Assessment of Educational Progress as a policy tool is that it is blind to the experiences of students.

As long as the NAEP system fails to control for the patterns of motivation, levels of need support, and degrees of engagement of the students, it will remain about as useful as a rocket trajectory calculated without accounting for the wind.

Until the NAEP testing process is guided by a model of learning that incorporates proper controls for classroom and school climate, it will not be as useful as it could be.

You can learn more about experiential data and how it can become a valuable part of school and classroom management in my video “How Education Policy is Maintaining a Market Failure in K-12” on my site, HolisticEquity.org, under the “Policy” tab.

Thanks for watching.

This article was printed from HolisticEquity.com